Making sense of a body of assembled knowledge while solving a complex problem is hard. We’ve all struggled to organize and communicate our reasoning using mind maps, flowcharts, evidence boards, or the ubiquitous whiteboard. Large language models (LLMs) will alleviate some of these difficulties by analyzing collections of text in ways previously impossible. However, our experience suggests that humans benefit significantly from conceptual models that can’t be understood or internalized with words alone. Fortunately, we can leverage LLMs to help with that too.

Over the last 20 years, Uncharted has developed many applications designed to help analysts sift through unstructured text to find clues, generate insights, and synthesize understanding of complex problems.

Interactive sense-making

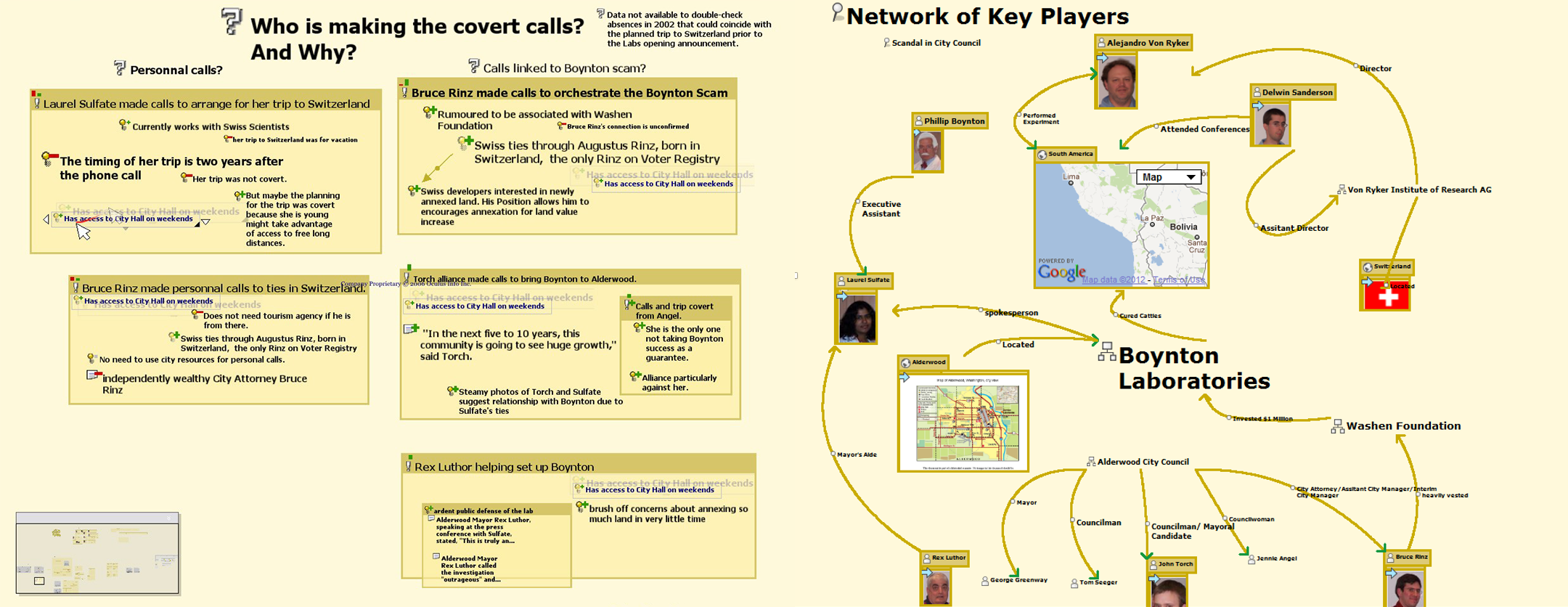

In the early days of online apps, we pioneered a fully web-based drag-and-drop “Sandbox” environment for collecting, organizing, and connecting data from anywhere on the web. Unlike a sequential text narrative from an LLM, Sandbox was an interactive evidence board, with drag-and-drop, gesture-based grouping of media snippets, connection-drawing, “post-it” notes, and hypothesis-formation structures all intermingled into an evolving cognitive map. Today, LLMs could significantly enhance interactive sense-making, using natural language post-its and connections to suggest new hypotheses or unexplored connections.

Analyst brainstorming and notes in the Sandbox were arranged, linked, and grouped into topics and meaningful patterns alongside supporting observations, evidence, and references. A landmark achievement of its time, this pioneering web app won several awards, including the VAST (Visual Analytics Science and Technology) Grand Challenge.

Structured reasoning

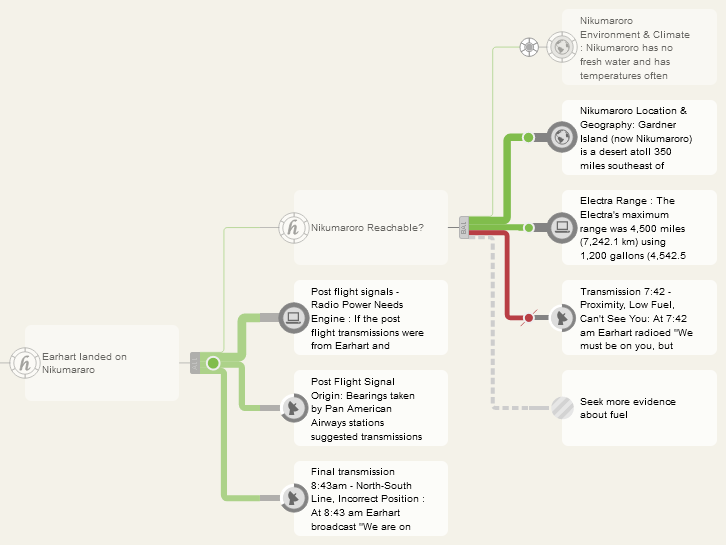

Analysts who want to carefully curate and test hypotheses and avoid biases often have more formal approaches for reasoning about unstructured data. Argument Mapper lets them hierarchically organize hypotheses with supporting and refuting evidence and successive levels of drill-down into propositions and supporting facts. Unlike a linear narrative produced by an LLM, structured reasoning provides a visual depiction of weak spots in arguments and gaps in evidence. Combining these two approaches may enable LLMs to reason about potential mismatches in evidence.

Argument Mapper visualizes evidence-based reasoning and logic flow to automatically evaluate hypotheses based on user assessments of credibility and relevance.
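
To make the idea concrete, here is a minimal sketch of how a hypothesis tree might be scored from analyst ratings. It is not Argument Mapper's actual model: the class names, the 0-to-1 rating scale, and the averaging rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    """One piece of evidence, rated by the analyst (hypothetical schema)."""
    text: str
    supports: bool      # True = supporting, False = refuting
    credibility: float  # analyst rating in [0, 1] (assumed scale)
    relevance: float    # analyst rating in [0, 1] (assumed scale)

    def weight(self) -> float:
        # Refuting evidence counts against the proposition.
        sign = 1.0 if self.supports else -1.0
        return sign * self.credibility * self.relevance


@dataclass
class Proposition:
    """A hypothesis or sub-proposition with evidence and drill-down children."""
    claim: str
    evidence: list[Evidence] = field(default_factory=list)
    children: list["Proposition"] = field(default_factory=list)

    def score(self) -> float:
        # Assumed rule: average the signed evidence weights and child scores.
        parts = [e.weight() for e in self.evidence]
        parts += [c.score() for c in self.children]
        return sum(parts) / len(parts) if parts else 0.0

    def gaps(self) -> list[str]:
        # Claims with neither evidence nor sub-propositions are evidence gaps.
        if not self.evidence and not self.children:
            return [self.claim]
        return [g for c in self.children for g in c.gaps()]


# Placeholder content, not real analysis data.
hypothesis = Proposition(
    "Top-level hypothesis",
    evidence=[Evidence("Report A", True, 0.8, 0.5)],
    children=[
        Proposition("Sub-claim 1", evidence=[
            Evidence("Report B", False, 0.5, 0.4),
            Evidence("Report C", True, 1.0, 1.0),
        ]),
        Proposition("Sub-claim 2"),  # nothing attached yet
    ],
)
print(round(hypothesis.score(), 3), hypothesis.gaps())
```

A simple average is only one possible aggregation rule; the point is that an explicit structure exposes unsupported claims, which a linear narrative tends to hide.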

Exploration of state-of-the-art research

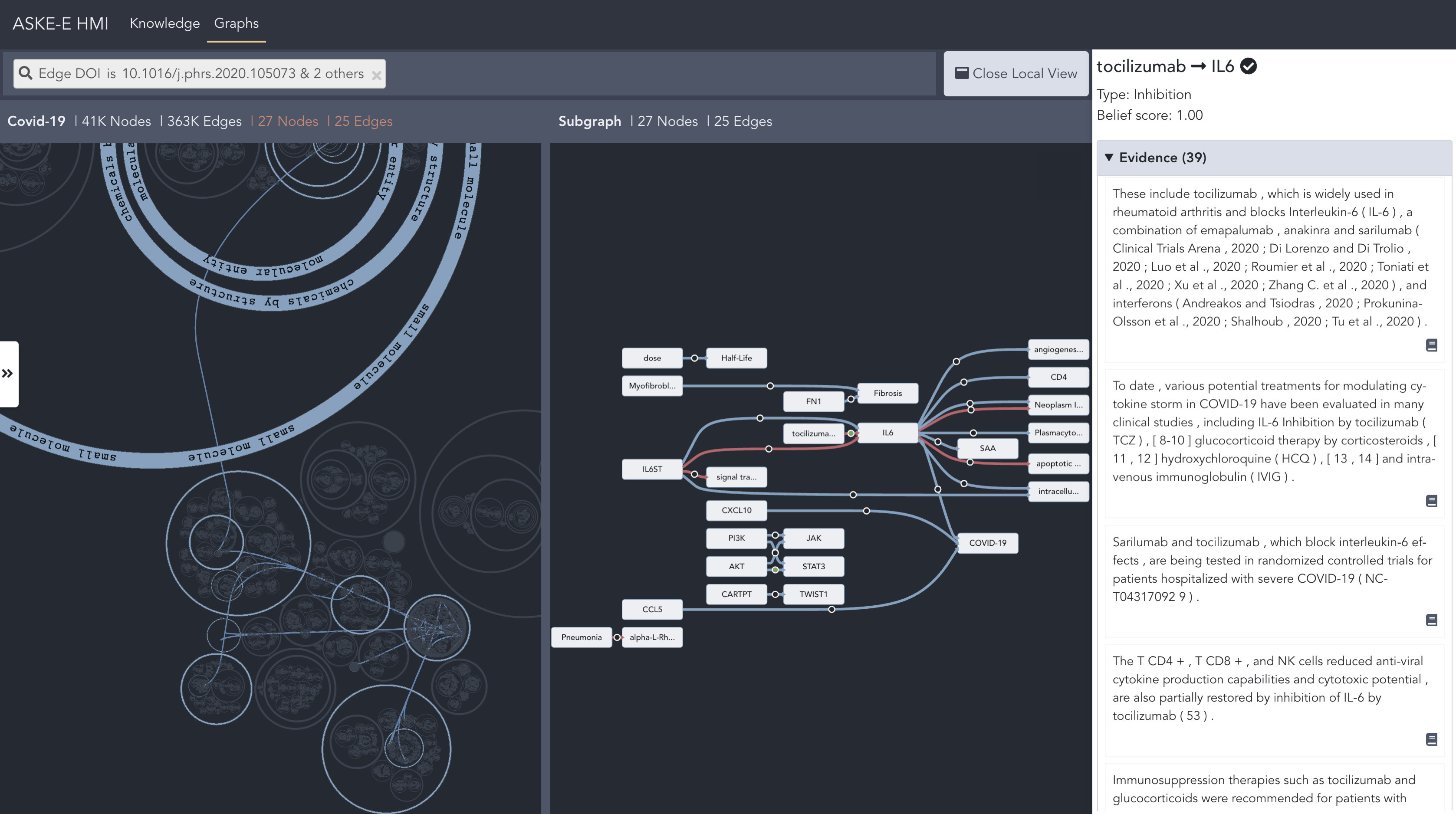

More recently, we’ve been extracting linkages across research papers and enabling global-to-micro analysis of all the linkages in a domain (such as biomechanisms for COVID-19). Users can see a global overview, explore the local neighborhood around an area of interest, access itemized lists of connections, find relevant sentences from source documents, and click through to the original documents. This approach allows the researcher to quickly review the current state of the art in an emerging domain.

An investigation of potential drug treatments for SARS-CoV-2 using a COVID-19 biological graph automatically derived from literature.
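
The global-to-micro workflow described above can be sketched as a small graph structure in which every link keeps a pointer back to its source sentence and document. This is an illustrative sketch only: `Link`, `LinkGraph`, the field names, and the placeholder entities are assumptions, not the data model of the actual application.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """A linkage extracted from a paper (hypothetical schema)."""
    source: str
    target: str
    sentence: str  # sentence the link was extracted from
    doc_id: str    # identifier used to click through to the document


class LinkGraph:
    def __init__(self, links):
        # Index each link under both endpoints (treated as undirected).
        self.adj = defaultdict(list)
        for link in links:
            self.adj[link.source].append(link)
            self.adj[link.target].append(link)

    def neighborhood(self, start, hops=1):
        """Return all entities within `hops` links of `start` (breadth-first)."""
        seen = {start}
        frontier = deque([(start, 0)])
        while frontier:
            node, depth = frontier.popleft()
            if depth == hops:
                continue
            for link in self.adj.get(node, []):
                other = link.target if link.source == node else link.source
                if other not in seen:
                    seen.add(other)
                    frontier.append((other, depth + 1))
        return seen

    def connections(self, node):
        """Itemized list of links touching `node`, with their provenance."""
        return list(self.adj.get(node, []))


# Placeholder entities and documents, not real extracted findings.
graph = LinkGraph([
    Link("entity_A", "entity_B", "Sentence linking A and B.", "doc-1"),
    Link("entity_B", "entity_C", "Sentence linking B and C.", "doc-2"),
    Link("entity_C", "entity_D", "Sentence linking C and D.", "doc-3"),
])
print(sorted(graph.neighborhood("entity_A", hops=2)))
for link in graph.connections("entity_B"):
    print(link.doc_id, "-", link.sentence)
```

Keeping the sentence and document identifier on each link is what lets a reader move from the overview down to the original text.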

Where we go from here

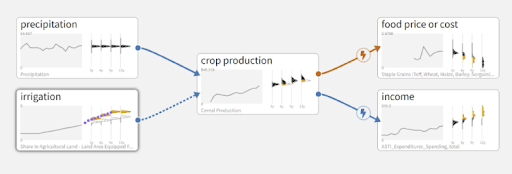

All of these applications augment human analysis of text with natural language processing (NLP) to automatically perform tasks such as identifying entities in text, clustering topics, revealing trends in sentiment and emotion, extracting positional statements or causal statements, and flagging abuses.

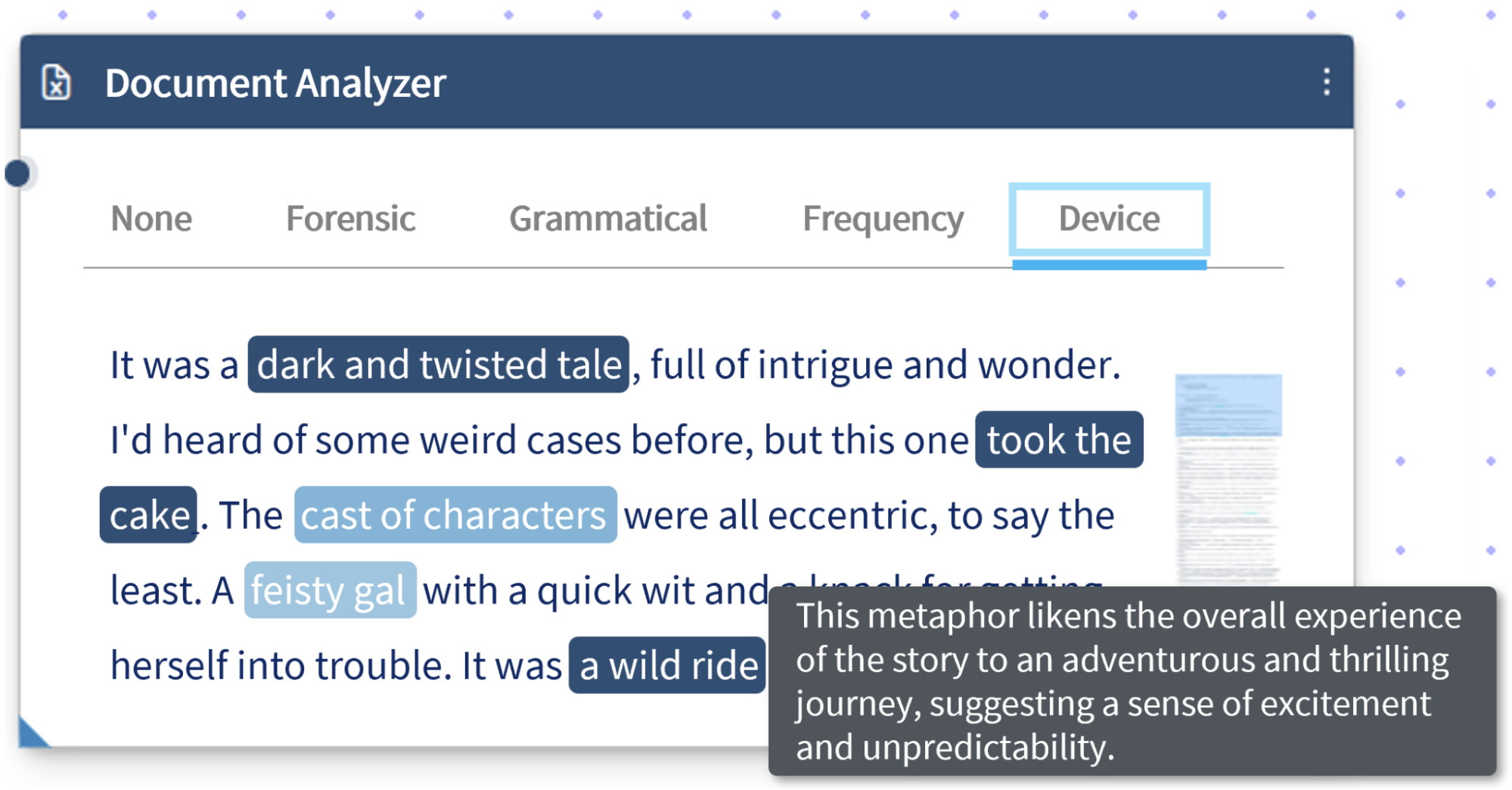

Now, we’re evolving our underlying analytics to incorporate LLMs such as ChatGPT, Cohere, and Bard. Previously impossible analytic capabilities such as metaphor detection or location of uncommon idioms are now feasible. We’re actively leveraging these capabilities to help make our users more effective; let the machine find the metaphor and let the analyst discern its impact in narrative context.

In our current linguistic analysis platform, this component for close reading leverages an LLM to identify and explain stylistic devices in context, thereby aiding fine-grained inspection of uncommon prose.

While LLMs are new, the analytic process in these examples remains unchanged: find clues, generate insights, and synthesize an actionable understanding. LLMs can provide an answer and an explanation, but words alone can’t provide all the rich context and conceptual modeling that is key to understanding. For complex problems, they’re just one more voice at the whiteboard, a new collaborator working to find solutions. At Uncharted, we’re leveraging our experience in machine-generated communication with graphics and text to create the next generation of sense-making, modeling, and reasoning workspaces that optimize the interaction between LLMs and humans.

More about the examples cited above: